- Damini Sinha

Gatsby is an open-source framework that combines functionality from React, GraphQL and Webpack into a single tool for building static websites and apps.

The Amazon OpenSearch Service is a managed service that makes it easy to deploy, operate, and scale OpenSearch in the AWS Cloud. OpenSearch is a popular open-source search and analytics engine for use cases such as log analytics, real-time application monitoring, and clickstream analytics.

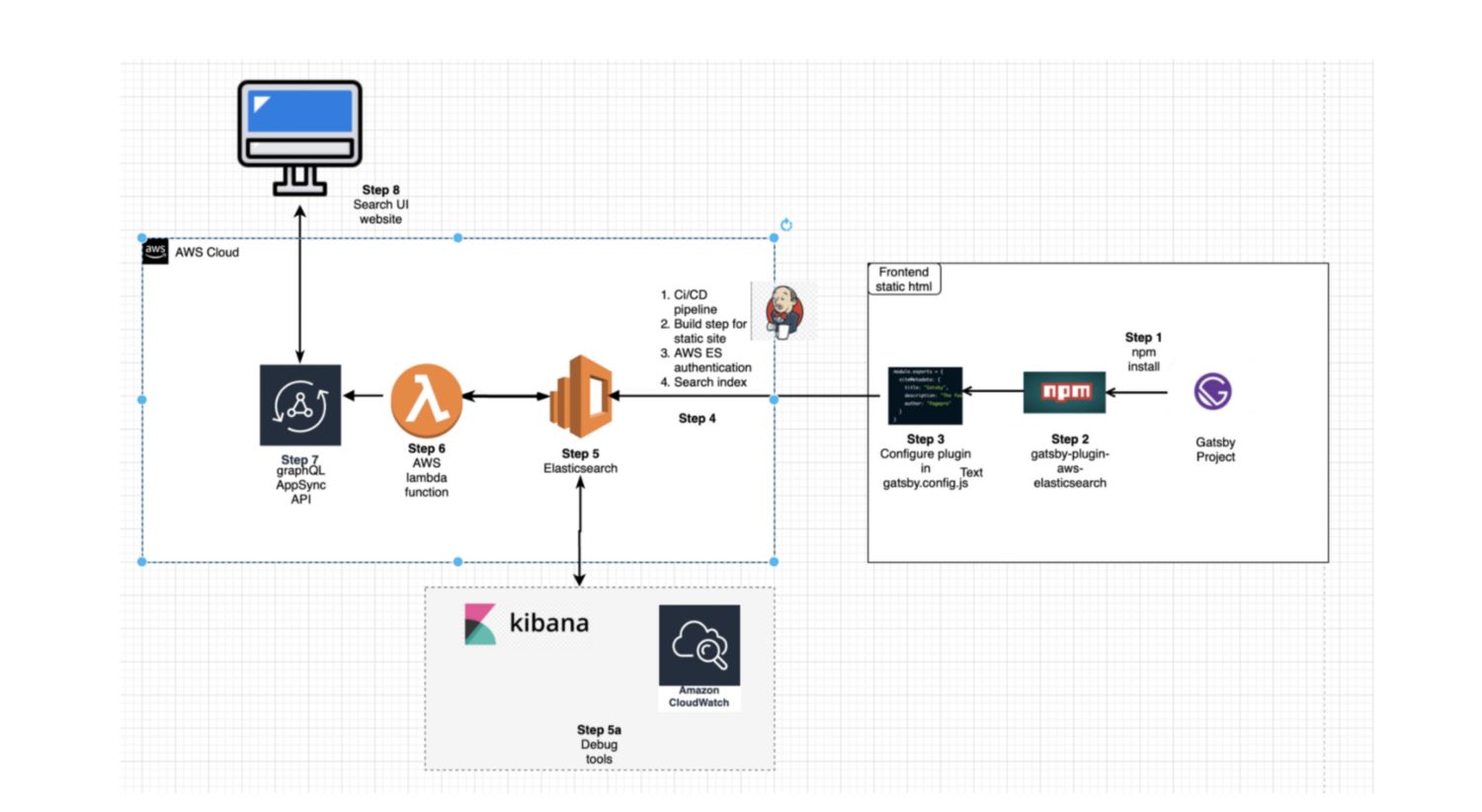

Diagram of the steps taken to connect the Gatsby project (frontend) to AWS OpenSearch

Set up the Gatsby project (Step 1)

Useful link for setting up a Gatsby project.

Install the gatsby-plugin-aws-elasticsearch plugin (Step 2)

First, install the package using npm or yarn.

```shell
yarn add -D gatsby-plugin-aws-elasticsearch
# or
npm install --save-dev gatsby-plugin-aws-elasticsearch
```

Then register the plugin in `gatsby-config.js`:

```js
// gatsby-config.js
module.exports = {
  plugins: [
    {
      resolve: `gatsby-plugin-aws-elasticsearch`,
      options: {
        endpoint: process.env.ELASTIC_AWS_ENDPOINT,
        // Toggle the synchronisation
        enabled: true,
        recreateIndex: true,
        // A GraphQL query to fetch the data
        query: `
          query {
            nodes {
              edges {
                node {
                  title
                  name
                  age
                  id
                  properties {
                    customJob
                  }
                  contentId
                }
              }
            }
          }
        `,
        // A function which takes the raw GraphQL data and returns the nodes to process.
        // Each edge wraps its record in `node`, so unwrap the edges here.
        selector: (data) => data.nodes.edges.map((edge) => edge.node),
        // A function which takes a single node (from selector) and returns an object
        // with the data to insert into Elasticsearch
        toDocument: (node) => ({
          id: node.id,
          title: node.title,
          name: node.name,
          age: node.age,
          // A `nested` field must receive an object, not a plain string
          properties: { customJob: node.properties.customJob },
          contentId: node.contentId,
        }),
        // The name of the index to insert the data into. If the index does not exist, it will be created.
        index: 'example-test',
        // An object with the mapping info for the index
        mapping: {
          title: { type: 'keyword' },
          name: { type: 'text' },
          age: { type: 'text' },
          properties: { type: 'nested' },
          contentId: { type: 'keyword' },
        },
        // The AWS IAM access key ID
        accessKeyId: process.env.ELASTIC_AWS_ACCESS_KEY_ID,
        // The AWS IAM secret access key
        secretAccessKey: process.env.ELASTIC_AWS_SECRET_ACCESS_KEY,
      },
    },
  ],
};
```
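To check what the plugin will send to OpenSearch before running a full build, the `selector` and `toDocument` steps can be exercised on their own. The sketch below assumes the field shapes from the query in the plugin options (`ContentNode` and `QueryData` are made-up type names) and uses an invented sample record. Each GraphQL edge wraps its record in a `node` field, so the selector unwraps the edges first; `properties` is kept as an object because OpenSearch expects an object (or an array of objects) for a field mapped as `nested`.

```typescript
// Shapes assumed from the GraphQL query in the plugin options (hypothetical names)
interface ContentNode {
  id: string;
  title: string;
  name: string;
  age: string;
  contentId: string;
  properties: { customJob: string };
}

interface QueryData {
  nodes: { edges: { node: ContentNode }[] };
}

// selector: GraphQL connections wrap every record in an edge, so unwrap them first
const selector = (data: QueryData): ContentNode[] =>
  data.nodes.edges.map((edge) => edge.node);

// toDocument: copy the fields to index; `properties` stays an object for the `nested` mapping
const toDocument = (node: ContentNode) => ({
  id: node.id,
  title: node.title,
  name: node.name,
  age: node.age,
  properties: { customJob: node.properties.customJob },
  contentId: node.contentId,
});

// Invented sample data, shaped like the query result
const sample: QueryData = {
  nodes: {
    edges: [
      {
        node: {
          id: "1",
          title: "Intro",
          name: "Ada",
          age: "36",
          contentId: "c-1",
          properties: { customJob: "Engineer" },
        },
      },
    ],
  },
};

// The documents the plugin would insert into the index
const documents = selector(sample).map(toDocument);
```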

Plugin tweaks to establish the connection between the Gatsby project and AWS OpenSearch (Step 4)

Since I cloned the gatsby-plugin-aws-elasticsearch plugin into the project, I want to mention the tweaks below, which resolved some of the issues I encountered.

The following plugin files required changes:

1. Updating the logic that passes the `credentials` parameter in api.ts (plugin file), which signs each request with the AWS credentials

Issue faced: requests were not going through, and AWS responded with 403 Forbidden.

Resolution: pass the `credentials` parameter to both the `sendRequest` and `signRequest` functions.

```ts
export const sendRequest = async <Request, Response>(
  method: 'GET' | 'PUT' | 'POST' | 'DELETE',
  path: string,
  document: Request,
  options: Options,
  language = 'no',
  credentials: AWSCredentials
): Promise<ResponseOrError<Response>> => {
  // ...
  const headers = signRequest(request, credentials);
  // ...
};
```
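A 403 from OpenSearch usually means the request was unsigned or signed with credentials the domain does not accept, so it helps to build the credentials once and fail loudly when they are incomplete. This is a hypothetical helper, not part of the plugin: `PluginOptions` and `credentialsFromOptions` are assumed names, and the real `AWSCredentials` type in api.ts may differ.

```typescript
// Hypothetical shapes; the plugin's own Options and AWSCredentials types may differ
interface AWSCredentials {
  accessKeyId: string;
  secretAccessKey: string;
  sessionToken?: string;
}

interface PluginOptions {
  endpoint: string;
  accessKeyId?: string;
  secretAccessKey?: string;
  sessionToken?: string;
}

// Build the credentials from the plugin options once, so they can be passed
// explicitly to sendRequest and signRequest; throw early if a key is missing
function credentialsFromOptions(options: PluginOptions): AWSCredentials {
  const { accessKeyId, secretAccessKey, sessionToken } = options;
  if (!accessKeyId || !secretAccessKey) {
    throw new Error("accessKeyId and secretAccessKey must be set in the plugin options");
  }
  // Only attach a session token when one was configured (temporary credentials)
  return sessionToken
    ? { accessKeyId, secretAccessKey, sessionToken }
    : { accessKeyId, secretAccessKey };
}
```

The resulting object would then be the single value handed to every `sendRequest` call, rather than each call site reading environment variables on its own.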

2. Adding `sessionToken` to options.ts (plugin file), which declares the configuration options available to the plugin

Issue faced: authentication failed with the available credentials because they are temporary.

Resolution: added `sessionToken` to the `OptionsStruct` object.

```ts
// ...
accessKeyId: string(),
secretAccessKey: string(),
sessionToken: string(),
// ...
```
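Temporary credentials (for example from `aws sts assume-role` or an SSO session) only work together with their session token, which a SigV4-signed request has to carry in the `X-Amz-Security-Token` header. A quick sanity check on the plugin options, assuming the standard AWS key prefixes (`ASIA` for temporary keys, `AKIA` for long-term IAM user keys); `checkCredentialOptions` and `securityTokenHeaders` are hypothetical names:

```typescript
interface CredentialOptions {
  accessKeyId: string;
  secretAccessKey: string;
  sessionToken?: string;
}

// Access key IDs starting with "ASIA" belong to temporary (STS) credentials;
// long-term IAM user keys start with "AKIA"
const isTemporaryAccessKey = (accessKeyId: string): boolean =>
  accessKeyId.startsWith("ASIA");

// Returns a description of the problem, or null when the combination looks usable
function checkCredentialOptions(o: CredentialOptions): string | null {
  if (isTemporaryAccessKey(o.accessKeyId) && !o.sessionToken) {
    return "temporary access key configured without a sessionToken";
  }
  return null;
}

// Extra header a SigV4 request must carry when it uses temporary credentials
function securityTokenHeaders(o: CredentialOptions): Record<string, string> {
  return o.sessionToken ? { "X-Amz-Security-Token": o.sessionToken } : {};
}
```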

3. Returning the result of `toDocument` in index.ts (the plugin's main file)

Issue faced: during the project build, `toDocument` came back as `undefined` while debugging, even though the configuration provided was correct.
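The post does not show the code before the fix, so this is an assumption about the cause: an arrow function with a block body (`{ ... }`) returns `undefined` unless it has an explicit `return`, so mapping over the nodes silently produces an array of `undefined` values. A minimal reproduction with a stand-in `toDocument`:

```typescript
interface Doc {
  id: string;
}

// Stand-in for options.toDocument
const toDocument = (node: { id: string }): Doc => ({ id: node.id });
const nodes = [{ id: "a" }, { id: "b" }];

// Block body without `return`: broken is [undefined, undefined]
const broken = nodes.map((node) => {
  toDocument(node);
});

// Explicit `return` (or an expression body) keeps the documents
const fixed = nodes.map((node) => {
  return toDocument(node);
});
```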

Resolution: return the result of `options.toDocument(node)` from the `map` callback.

```ts
const nodes = options.selector(data).map((node) => {
  return options.toDocument(node);
});
```

4. Adding retry logic for throttled requests in api.ts (plugin file): updated the code to handle 429 responses with exponential backoff

Issue faced: sometimes a request to AWS failed on the first attempt but went through on the second. I got status codes 503, 429 and 502 even though the AWS services were up and running.

Resolution: added retry logic with exponential backoff around the `getResponse` call to handle these errors.

```ts
// Throttling (429) and transient gateway/availability errors (502, 503)
const isRetryable = (status: number) => status === 429 || status === 502 || status === 503;
const delay = (ms: number) => new Promise((resolve) => setTimeout(resolve, ms));

if (isRetryable(response.status)) {
  // Exponential backoff: wait 3 s, 6 s, then 12 s before each retry
  for (let attempt = 0; attempt < 3; attempt++) {
    await delay(3000 * 2 ** attempt);
    response = await getResponse();
    if (!isRetryable(response.status)) {
      break;
    }
  }
}
```
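The same idea can be pulled out into a reusable helper. This is a sketch, not the plugin's code: `sendWithRetry` and `backoffDelay` are hypothetical names. The delay doubles on each attempt up to a cap, and it adds "full jitter" (a random wait between zero and the computed delay), a common refinement so that parallel build workers do not all retry at the same moment.

```typescript
interface HasStatus {
  status: number;
}

// Status codes treated as transient: throttling (429) and gateway/availability errors
const RETRYABLE_STATUSES = new Set([429, 502, 503]);

// Exponential delay for 0-based retry `attempt`: baseMs * 2^attempt, capped at maxMs
function backoffDelay(attempt: number, baseMs: number, maxMs: number): number {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Sends once, then retries up to `retries` times while the status stays retryable
async function sendWithRetry<R extends HasStatus>(
  send: () => Promise<R>,
  retries = 3,
  baseMs = 3000,
  maxMs = 20000,
): Promise<R> {
  let response = await send();
  for (let attempt = 0; attempt < retries && RETRYABLE_STATUSES.has(response.status); attempt++) {
    // Full jitter: wait a random time between 0 and the exponential delay
    await sleep(Math.random() * backoffDelay(attempt, baseMs, maxMs));
    response = await send();
  }
  return response;
}
```

In api.ts this would wrap the existing call, e.g. `response = await sendWithRetry(getResponse)`. The last response is returned even if it is still an error, so the caller keeps its existing error handling.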

Connection to OpenSearch established (Step 5)

With this configuration and these tweaks in place, the connection to AWS should succeed. For my project, I verified it through the CI/CD build pipeline.

The next bits get more exciting as we move on to AWS services…

AWS Lambda function (Step 6)

Lambda is a compute service that lets you run code without provisioning or managing servers.

Lambda runs instances of your function to process events. You can invoke your function directly using the Lambda API, or you can configure an AWS service or resource to invoke your function.

This Node.js snippet, made up of a few async functions with some logging, did the trick for me. The Lambda console is handy for debugging the function until you get a response body back with status code 200.

Below is the code. Setting the request headers explicitly helped me a lot to get a proper response.

```js
const AWS = require("aws-sdk");

// Placeholders: use your own region, index name and SSM parameter name
const region = "aws-region";
const ssm = new AWS.SSM({ region });

// Sends a signed multi_match search to the OpenSearch domain and resolves with the raw body
const getQueryResults = (searchString, domain) =>
  new Promise((resolve, reject) => {
    const endpoint = new AWS.Endpoint(domain);
    const request = new AWS.HttpRequest(endpoint, region);

    request.method = "GET";
    request.path += "indexName/_search";
    // JSON.stringify escapes the search term; multi_match expects a `fields` array
    request.body = JSON.stringify({
      query: {
        multi_match: {
          query: searchString,
          fields: ["title"],
        },
      },
    });
    request.headers["host"] = domain;
    request.headers["Content-Type"] = "application/json";
    request.headers["Content-Length"] = Buffer.byteLength(request.body).toString();

    // Reads AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN, which Lambda sets
    const credentials = new AWS.EnvironmentCredentials("AWS");
    const signer = new AWS.Signers.V4(request, "es");
    signer.addAuthorization(credentials, new Date());

    const client = new AWS.HttpClient();
    client.handleRequest(
      request,
      null,
      (response) => {
        let responseBody = "";
        response.on("data", (chunk) => {
          responseBody += chunk;
        });
        response.on("end", () => resolve(responseBody));
      },
      (error) => reject(error)
    );
  });

// Reads the OpenSearch domain endpoint from SSM Parameter Store
const getSsmValue = async () => {
  const data = await ssm.getParameter({ Name: "DomainSsmParameterName" }).promise();
  return { statusCode: 200, body: data };
};

exports.handler = async (event) => {
  console.log("Received event: ", JSON.stringify(event, null, 2));

  let domainResponse;
  try {
    domainResponse = await getSsmValue();
  } catch (error) {
    console.log("Error while fetching ssm parameter value `DomainSsmParameterName`", error);
    throw error;
  }

  const domain = domainResponse.body.Parameter.Value;
  // `query` comes from the AppSync payload; "Example" is used for Lambda console tests
  const results = await getQueryResults(event.query || "Example", domain);
  return JSON.parse(results);
};
```
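Two details of the search request are easy to get wrong: `multi_match` takes the fields to search in a `fields` array, and splicing the search term into a JSON template string produces invalid JSON as soon as the term contains a double quote. A small TypeScript sketch of building the body as an object and serialising it once (`buildSearchBody` and `templateBody` are hypothetical names; `title` is the default field only because it is the one searched above):

```typescript
interface MultiMatchBody {
  query: {
    multi_match: {
      query: string;
      fields: string[];
    };
  };
}

// Build the request body as a plain object and let JSON.stringify handle escaping
function buildSearchBody(searchString: string, fields: string[] = ["title"]): string {
  const body: MultiMatchBody = {
    query: { multi_match: { query: searchString, fields } },
  };
  return JSON.stringify(body);
}

// For comparison: interpolating into a template string breaks on a double quote
const templateBody = (searchString: string): string =>
  `{"query":{"multi_match":{"query":"${searchString}","fields":["title"]}}}`;

// Content-Length must count UTF-8 bytes, not UTF-16 characters
const byteLength = (body: string): number => new TextEncoder().encode(body).length;
```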

GraphQL AppSync API (Step 7)

GraphQL APIs built with AWS AppSync give front-end developers the ability to query multiple databases, microservices, and APIs from a single GraphQL endpoint. There are a few steps to achieve this, which can be followed here:

1. Design your schema
2. Attach a data source (for me, the data source for search is the AWS Lambda function from Step 6)
3. Attach resolvers
4. Retrieve data with a GraphQL query

Attaching resolvers can get a bit tricky, so here is an example based on the schema above.

Request mapping template (resolver):

```
#** RequestMapping array
The value of 'payload' after the template has been evaluated
will be passed as the event to AWS Lambda.
*#
{
  "version": "2017-02-28",
  "operation": "Invoke",
  "payload": {
    "field": "search",
    "query": $util.toJson($context.arguments.query)
  }
}
```

Response mapping template (resolver):

```
## Declare an empty array as the response mapping array
#set( $result = [] )
## Loop through the OpenSearch hits
#foreach( $entry in $context.result.hits.hits )
  ## Add each item to the result array
  $util.qr($result.add({
    "title": $entry.get("_source")['title'],
    "age": $entry.get("_source")['age'],
    "name": $entry.get("_source")['name']
  }))
#end
#set( $res = { "aemResult": $result } )
## Serialize the result to JSON
$util.toJson($res)
```
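Because VTL is awkward to unit-test, it can help to pin down in code the shape the response template should produce. The sketch below mirrors the template in TypeScript: it walks `hits.hits` from the OpenSearch response and keeps `title`, `age` and `name` from each `_source`. The type and function names are hypothetical; the `aemResult` key matches the template, and the sample response is invented.

```typescript
interface SearchHit<T> {
  _source: T;
}

interface SearchResponse<T> {
  hits: { hits: SearchHit<T>[] };
}

interface ResultItem {
  title: string;
  age: string;
  name: string;
}

// Equivalent of the #foreach loop: keep only the fields the GraphQL type exposes
function toAemResult(response: SearchResponse<ResultItem>): { aemResult: ResultItem[] } {
  return {
    aemResult: response.hits.hits.map(({ _source }) => ({
      title: _source.title,
      age: _source.age,
      name: _source.name,
    })),
  };
}

// Invented response; extra indexed fields such as contentId are dropped
const sampleResponse = {
  hits: {
    hits: [{ _source: { title: "Intro", age: "36", name: "Ada", contentId: "c-1" } }],
  },
};
const mapped = toAemResult(sampleResponse);
```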

Now that there is a record in your database, you'll get results when you run a query. One of the main advantages of GraphQL is the ability to specify the exact data requirements of your application in a query. You will start getting the results as a JSON object.


Testing with the UI search component (Step 8)

Assuming the UI components are ready and connected to the GraphQL AppSync API, you will start getting search results like the following. Done.
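On the UI side, the search component only needs to POST a GraphQL operation to the AppSync endpoint. A sketch assuming the API uses API-key authorization (sent in the `x-api-key` header) and a schema with a `search(query: String!)` field returning `aemResult { title name age }`. Those names are guesses based on the resolver templates, and `buildSearchRequest` and `searchAppSync` are hypothetical helpers, so adjust them to your own schema and auth mode.

```typescript
// Hypothetical operation: rename the field and type to match your schema
const SEARCH_QUERY = `
  query Search($query: String!) {
    search(query: $query) {
      aemResult {
        title
        name
        age
      }
    }
  }
`;

interface SearchRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Build the HTTP request for an AppSync API that uses API-key authorization
function buildSearchRequest(apiUrl: string, apiKey: string, searchString: string): SearchRequest {
  return {
    url: apiUrl,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json", "x-api-key": apiKey },
      body: JSON.stringify({ query: SEARCH_QUERY, variables: { query: searchString } }),
    },
  };
}

// Send the request and return the result list, surfacing GraphQL errors
async function searchAppSync(apiUrl: string, apiKey: string, searchString: string) {
  const { url, init } = buildSearchRequest(apiUrl, apiKey, searchString);
  const response = await fetch(url, init);
  const json: any = await response.json();
  if (json.errors) {
    throw new Error(JSON.stringify(json.errors));
  }
  return json.data.search.aemResult;
}
```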

If you have read this far, you definitely deserve a cookie. Don’t forget to clap and share. Thank you.