- Oleh Svintsitskyy

What is this about?

This article describes a continuous integration setup in Azure DevOps: specifically, the approach to organizing build pipelines and the motivation behind it.

What follows assumes a basic understanding of the continuous integration concepts used in Azure DevOps, such as pipeline, stage, job, and step.

Why?

- Modernization/migration of an existing portfolio, built on a legacy tech stack and infrastructure, to the Azure cloud platform.

- Having set up and maintained CI/CD pipelines together with supporting infrastructure during my previous assignments, using different tools and tech stacks, I decided to take on the challenge of doing it from scratch in Azure DevOps, drawing on that experience.

What do I have?

- A legacy CI setup built on top of Team Foundation Server Build Service that was created a very long time ago and is hard to understand

- A set of .net and node-based projects

Where do I want to be?

The goal is to create a setup that is:

- Reusable

- Flexible and extendable

- Easy-to-understand

- Fast in giving feedback to developers

- Low in maintenance effort

- Under version control, but not stored together with the source code it is used on

- Robust and troubleshootable

Two last points to mention:

- I would like to start by defining a process and use a tool to implement it, rather than build a process around a tool

- I would like to stay as vanilla as possible so it’s easy to understand for others

How do I get there?

Classic or YAML

Both:

- YAML - primary way, as it provides more flexibility and preserves history.

- Classic - playground, as classic pipelines are quicker to set up and can generate YAML, which is handy when trying new things.

I ended up with one classic pipeline containing a set of jobs that target different projects, to which I connect different repositories when needed.

Reusability

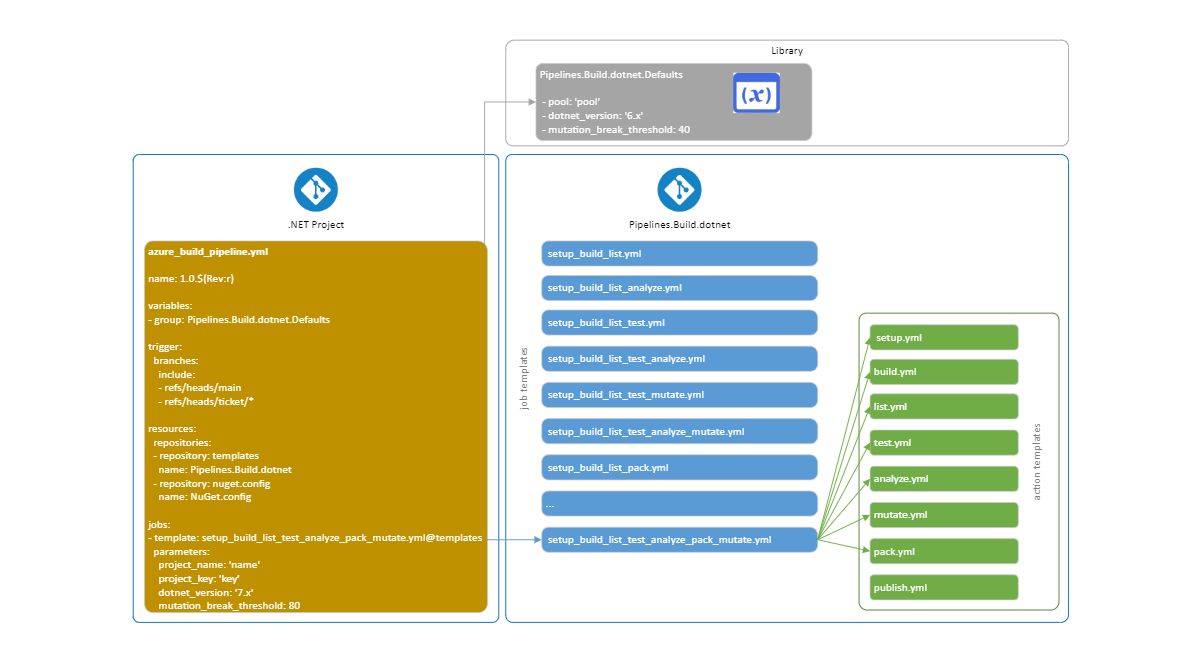

The main principles behind the organization are Single Responsibility and DRY (Don't Repeat Yourself). Aside from having pipelines under version control, I would like to avoid ‘blind copy/pasta’ across different pipelines in the name of ‘reusability’. That leaves me with the obvious choice of using templates stored in a dedicated repository (they might even be stored in a dedicated DevOps project). The projects I work with are either .net or node-based, so each stack gets its own group of templates, placed in a separate repository.

Process

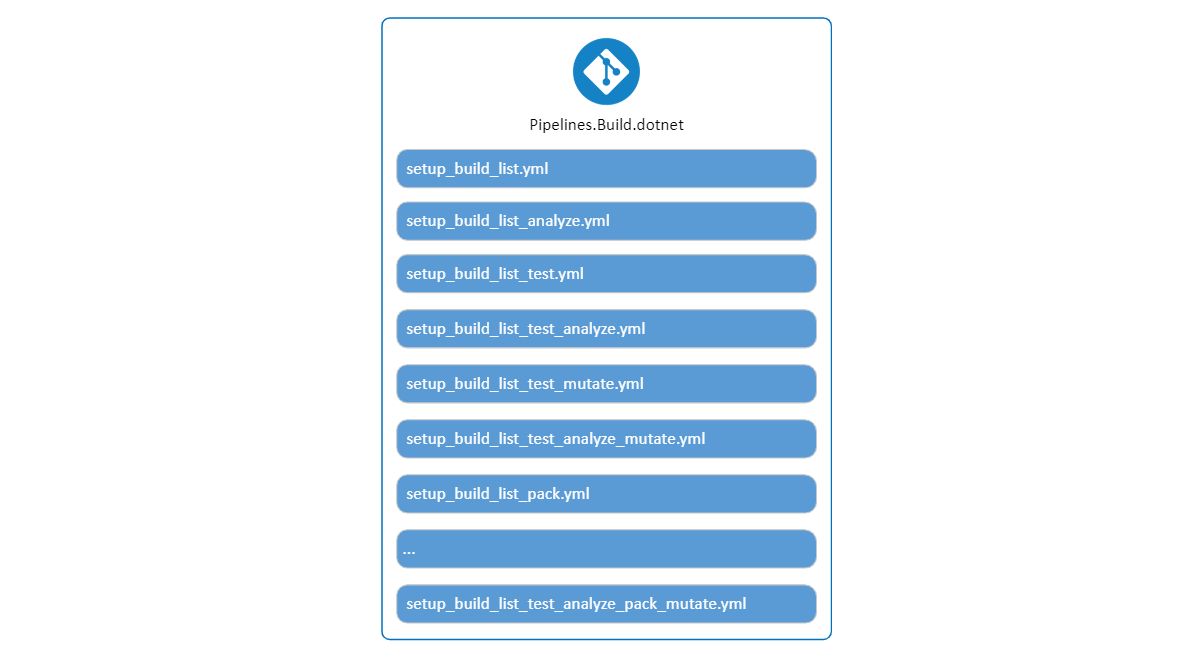

The process defined in the template already exists: it is the set of sequential actions applied to each project/deliverable. For the .net-based projects I work with, the list of actions includes:

- Build

- Test

- Analyze (perform static code analysis using SonarQube)

- Pack (for NuGet packages)

- Publish (for web apps/apis)

The list is further extended with the following actions:

- Setup: steps used to set up infrastructure for the rest of the pipeline, such as which .net sdk to use, NuGet feed authentication, etc. (this is always the first action in the sequence)

- List: light version of software composition analysis using built-in tools as described here

- Mutate: mutation testing that I’m starting to utilize

There is a list of actions, but each project has unique needs that require a different combination of them. My experience says it is more efficient to create a template for each combination than to have a single ‘monster’ template that tries to meet every project's needs, as the latter introduces unnecessary noise and is harder to follow and maintain. Plus, unused combinations can be dropped at any time with no extra effort.

Within each group, every combination starts with the same actions in the same sequence (setup - build - list), which reduces the total number of combinations.

Another argument for having a set of ready-to-use templates is that, during the modernization/migration phase, continuous integration can be introduced early and start simple, with the ability to extend it by switching to a different template as work progresses (tests are fixed, access is acquired, etc.).

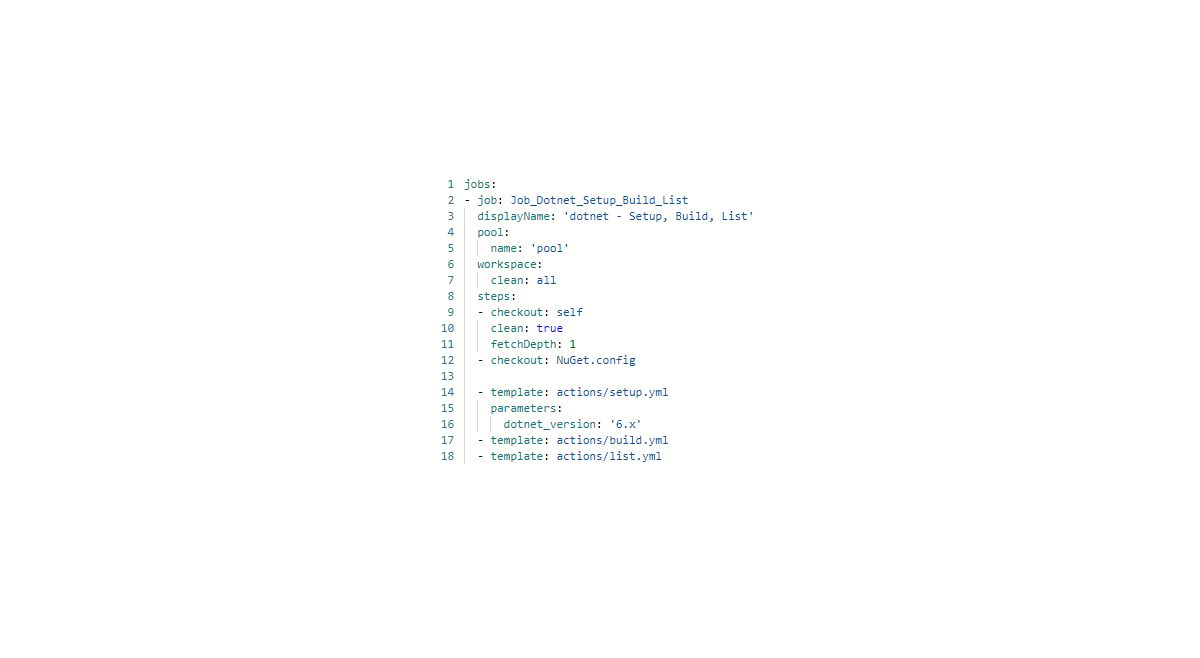

Since each combination is a set of sequential actions, it maps naturally onto a job.

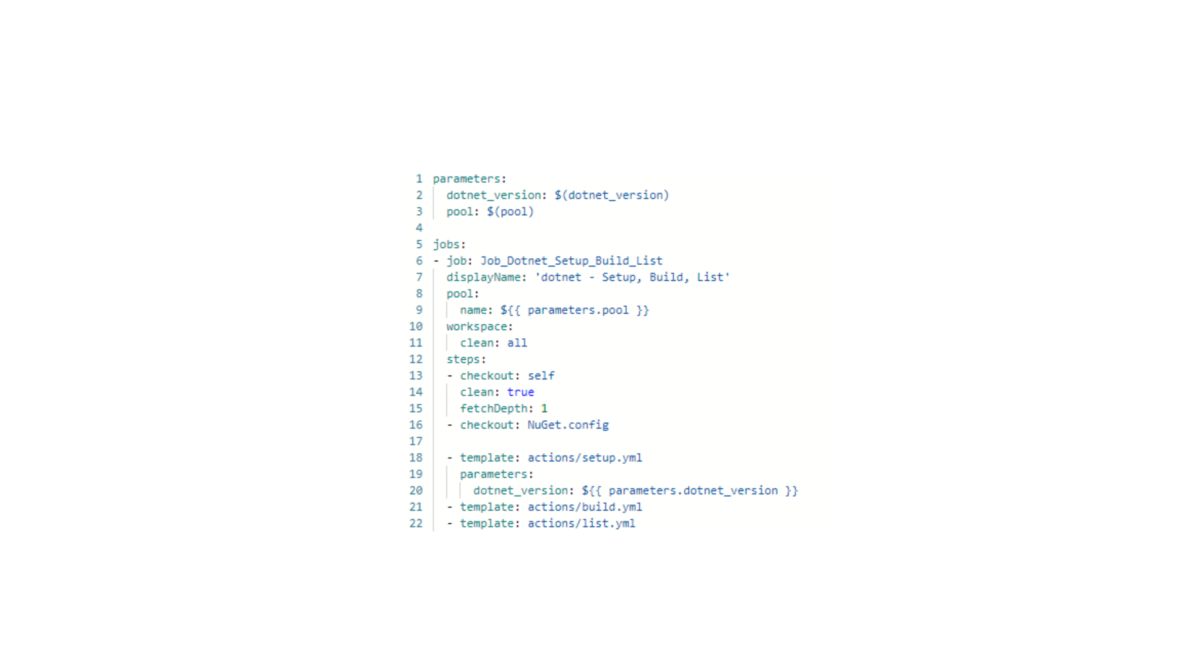

The definition of each action also follows the Single Responsibility and DRY principles. This results in another set of nested templates, which are placed in a subfolder and referenced from the root templates.
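The relationship between a root template and the nested action templates can be sketched as follows. This is a minimal illustration, not the actual template: the file names (`build_test_pack.yml`, `actions/*.yml`) and the `solution` parameter are hypothetical, and it assumes the root templates sit at the repository root with the action templates in an `actions` subfolder.

```yaml
# build_test_pack.yml (hypothetical root template: one job for one combination of actions)
parameters:
  - name: solution            # hypothetical parameter: path to the solution to build
    type: string

jobs:
  - job: build_test_pack
    displayName: Build, test and pack
    steps:
      # Every combination starts with the same three actions.
      - template: actions/setup.yml
      - template: actions/build.yml
        parameters:
          solution: ${{ parameters.solution }}
      - template: actions/list.yml
        parameters:
          solution: ${{ parameters.solution }}
      # The remaining actions are what distinguishes this combination.
      - template: actions/test.yml
        parameters:
          solution: ${{ parameters.solution }}
      - template: actions/pack.yml
        parameters:
          solution: ${{ parameters.solution }}
```

Template paths are resolved relative to the file that includes them, so the root template can reference the subfolder without knowing which repository alias the consuming pipeline uses.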

Actions

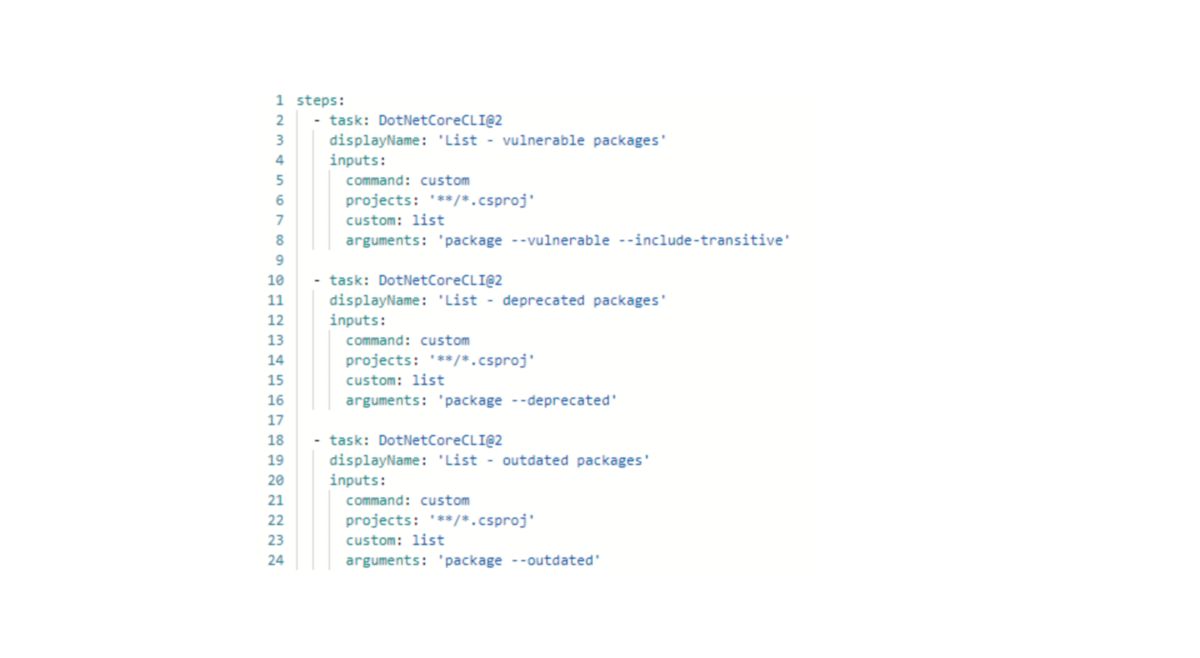

Actions do not match 1:1 with steps and serve as containers for a group of steps:

- Setup: use .net sdk, authenticate with feeds

- Build: restore dependencies then build

- List: list vulnerable, list deprecated and list outdated dependencies

- Analyze: we use SonarQube for static code analysis, which involves three different tasks. They have to be split into two separate actions (analyze_prepare and analyze), because the SonarQubePrepare task must run before compilation and the analysis must run after the tests

- Mutate: includes installing the tool, using it to run mutation testing, and then publishing the report

Example of what the list action YAML looks like:
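A minimal sketch of such a list action template, not the original file. It assumes the three checks are run with the built-in `dotnet list package` command. The file name `actions/list.yml` and the `solution` parameter are hypothetical.

```yaml
# actions/list.yml (hypothetical file name)
parameters:
  - name: solution            # hypothetical parameter: solution or project to inspect
    type: string

steps:
  # Report packages with known vulnerabilities, including transitive dependencies.
  - script: dotnet list "${{ parameters.solution }}" package --vulnerable --include-transitive
    displayName: List vulnerable dependencies

  # Report packages that their authors have marked as deprecated.
  - script: dotnet list "${{ parameters.solution }}" package --deprecated
    displayName: List deprecated dependencies

  # Report packages for which a newer version is available.
  - script: dotnet list "${{ parameters.solution }}" package --outdated
    displayName: List outdated dependencies
```

These commands read the output of a package restore, which is one reason to place the list action after the build action. In this sketch they only report findings to the log and do not fail the job.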

Fast Feedback and Easy to Understand

Each combination of actions is kept within a single job to avoid additional repo checkouts or sharing anything with the next job/stage via pipeline artifacts. This is why root templates are used to define jobs and action templates are used as building blocks for them. Stages are not used, as they serve as containers for jobs, which is something that I’m trying to avoid within the build pipeline (or the 'build' part of the pipeline).

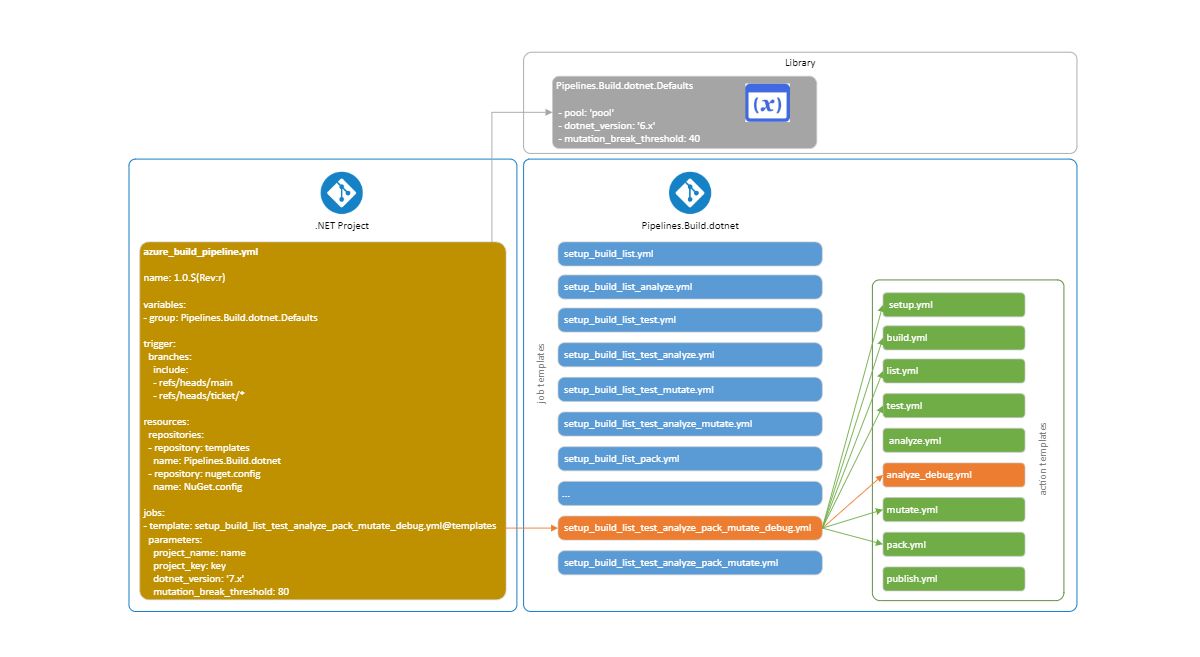

Flexibility

I would like to have the option of adjusting the process to meet specific project needs if/when necessary, with minimum effort and no disturbance to existing pipelines or templates. For example, the 'mutate' action can take a considerable amount of time for large projects. Since, as of today, its feedback is treated as 'For Your Information, No Immediate Action Required', I would like the flexibility to keep using it, but perhaps within a separate job targeting a low-priority pool, or as a separate pipeline with a scheduled trigger during non-office hours, so that it does not affect other pipeline runs.

Even if I need to onboard a project that has multiple deliverables (for example multiple NuGet packages in one solution), I can still define the job directly in the build pipeline definition and reuse the action templates there.
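As an illustration of the 'mutate' scenario above, here is a hedged sketch of a separate pipeline that reuses the action templates in its own job, on a schedule and on a different pool. The pool name, the repository name, the branch, and the template file names are all hypothetical.

```yaml
# Hypothetical pipeline definition that runs only the 'mutate' action at night.
trigger: none                     # no CI trigger; runs come from the schedule only

schedules:
  - cron: '0 1 * * 1-5'           # 01:00 UTC, Monday to Friday
    displayName: Nightly mutation testing
    branches:
      include:
        - main

resources:
  repositories:
    - repository: templates       # alias used in the template references below
      type: git
      name: Templates/dotnet-templates   # hypothetical project/repository

jobs:
  - job: mutate
    displayName: Mutation testing
    pool:
      name: low-priority          # hypothetical agent pool
    steps:
      - template: actions/setup.yml@templates
      - template: actions/mutate.yml@templates
```

Because the job is defined in the pipeline definition itself, neither the existing job templates nor the pipelines that use them need to change.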

Global default values

Since the Single Responsibility and ‘Don’t Repeat Yourself’ principles are applied everywhere, any defaults that are common to multiple job templates (for example the version of the .NET SDK, the pool to be used, different types of thresholds, etc.) have to be moved out and stored somewhere else. Variable groups fit that purpose perfectly, as they can be shared across pipelines. They store the global defaults, with a dedicated variable group for each group of templates.

Default values should be overridable. This is achieved by using the value of a variable from the variable group as the default value of a parameter inside the job template.

This transforms the job template referenced above into:
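A minimal sketch of the idea, assuming a variable named `DotnetSdkVersion` in the variable group (the variable, parameter, and job names are hypothetical). The parameter default is the literal text `$(DotnetSdkVersion)`, which is resolved at run time, once the consuming pipeline has referenced the variable group.

```yaml
# Hypothetical job template with an overridable global default.
parameters:
  - name: dotnetSdkVersion
    type: string
    default: '$(DotnetSdkVersion)'   # falls back to the variable group value at run time

jobs:
  - job: build
    steps:
      - task: UseDotNet@2
        displayName: Use .NET SDK ${{ parameters.dotnetSdkVersion }}
        inputs:
          packageType: sdk
          version: ${{ parameters.dotnetSdkVersion }}
```

A pipeline that passes `dotnetSdkVersion: 8.0.x` gets that value, while a pipeline that passes nothing gets whatever the variable group currently holds. This pattern only works in places where run-time variables are expanded, such as task inputs.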

Pipeline Definition

Each repo with a project/deliverable contains a YAML-based pipeline definition that ties everything together:

- Defines naming/versioning

- References appropriate variable group with global defaults

- Defines triggers

- Specifies repositories to be used

- References the job template(s) to be used

- Provides overrides for the global defaults and other parameters
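The points above could come together in a definition like the following sketch. Every name in it (variable group, repositories, template file, parameters, solution path) is hypothetical and only illustrates the shape.

```yaml
# azure-pipelines.yml in the project repository (all names are hypothetical)
name: $(Date:yyyyMMdd)$(Rev:.r)         # naming/versioning of the run

variables:
  - group: dotnet-defaults              # variable group with the global defaults

trigger:
  branches:
    include:
      - main

resources:
  repositories:
    - repository: templates             # alias for the repository holding the templates
      type: git
      name: Templates/dotnet-templates
      ref: refs/heads/main

jobs:
  - template: build_test_pack.yml@templates
    parameters:
      solution: src/MyLibrary.sln
      dotnetSdkVersion: 8.0.x           # overrides the global default
```

Switching a project to a different combination of actions then means changing a single template reference in this file.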

Robust and Troubleshootable

Inevitably, things will break or will need to be changed (adjusted, extended, upgraded). To minimize disruption of existing working pipelines (other colleagues’ work) I would like to:

- have a plan for troubleshooting effectively

- have some kind of ‘health check’ that is able to catch YAML formatting mistakes and integration issues with external tools that are used in templates

- know where templates are used to effectively communicate breaking changes or know where to fix them

Troubleshooting is addressed by creating a copy of the template, or of the whole chain, and using the copy for troubleshooting. Once the issue is resolved, the changes are copied into the live template. I find this approach simpler than working through a ‘debug’ branch.

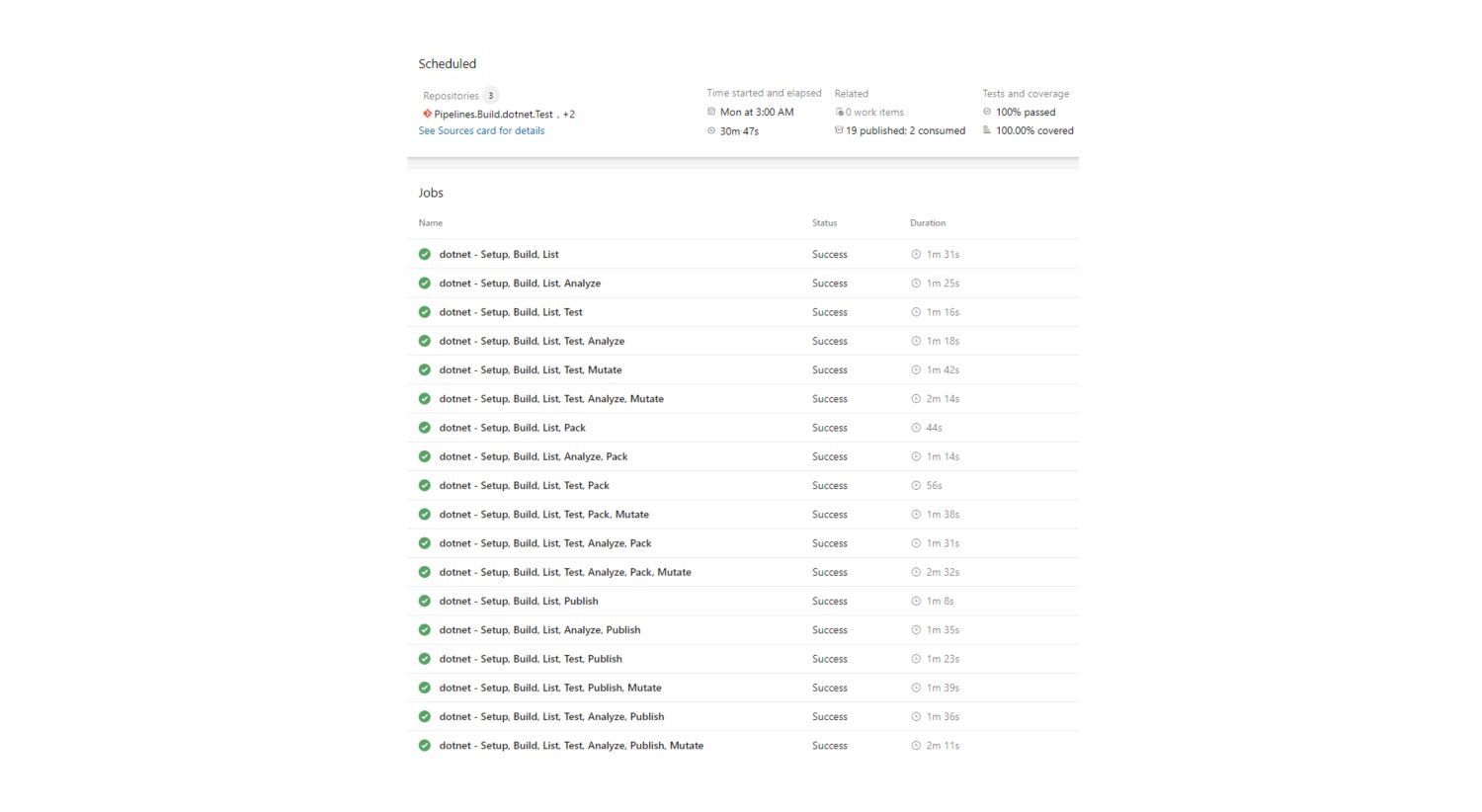

'Health checks'

Since templates are shared, I would like to proactively catch any YAML formatting mistakes (not everybody edits YAML using an editor) or integration issues with external tools. To address this, one more set of repositories is created, each containing a simple project that can use all actions and a ‘health check’ build pipeline definition that references all available job templates for that group. These specialized pipelines are triggered once per day during non-office hours, reducing bug lifetime to a maximum of one day.
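A ‘health check’ definition along these lines might look like the sketch below. The template file names, the repository and variable group names, and the sample solution path are hypothetical, and each referenced job template is assumed to define a job with a unique name.

```yaml
# Hypothetical 'health check' pipeline for the .net template group.
trigger: none

schedules:
  - cron: '0 2 * * *'             # once per day, 02:00 UTC
    displayName: Nightly template health check
    branches:
      include:
        - main
    always: true                  # run even when the sample project has not changed

variables:
  - group: dotnet-defaults

resources:
  repositories:
    - repository: templates
      type: git
      name: Templates/dotnet-templates

jobs:
  # One entry per available job template in the group.
  - template: build_test.yml@templates
    parameters:
      solution: src/Sample.sln
  - template: build_test_pack.yml@templates
    parameters:
      solution: src/Sample.sln
```

The `always: true` setting matters here: the templates live in another repository, so the sample project itself may go unchanged for a long time, and without it the scheduled run could be skipped.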

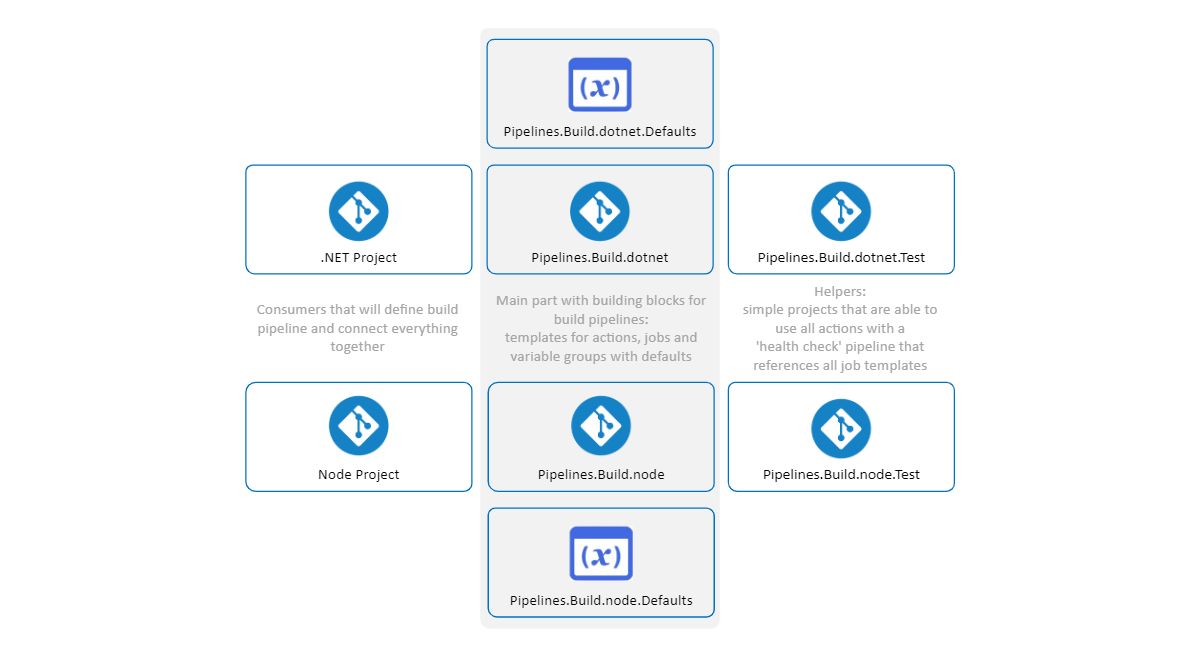

The final infrastructure setup:

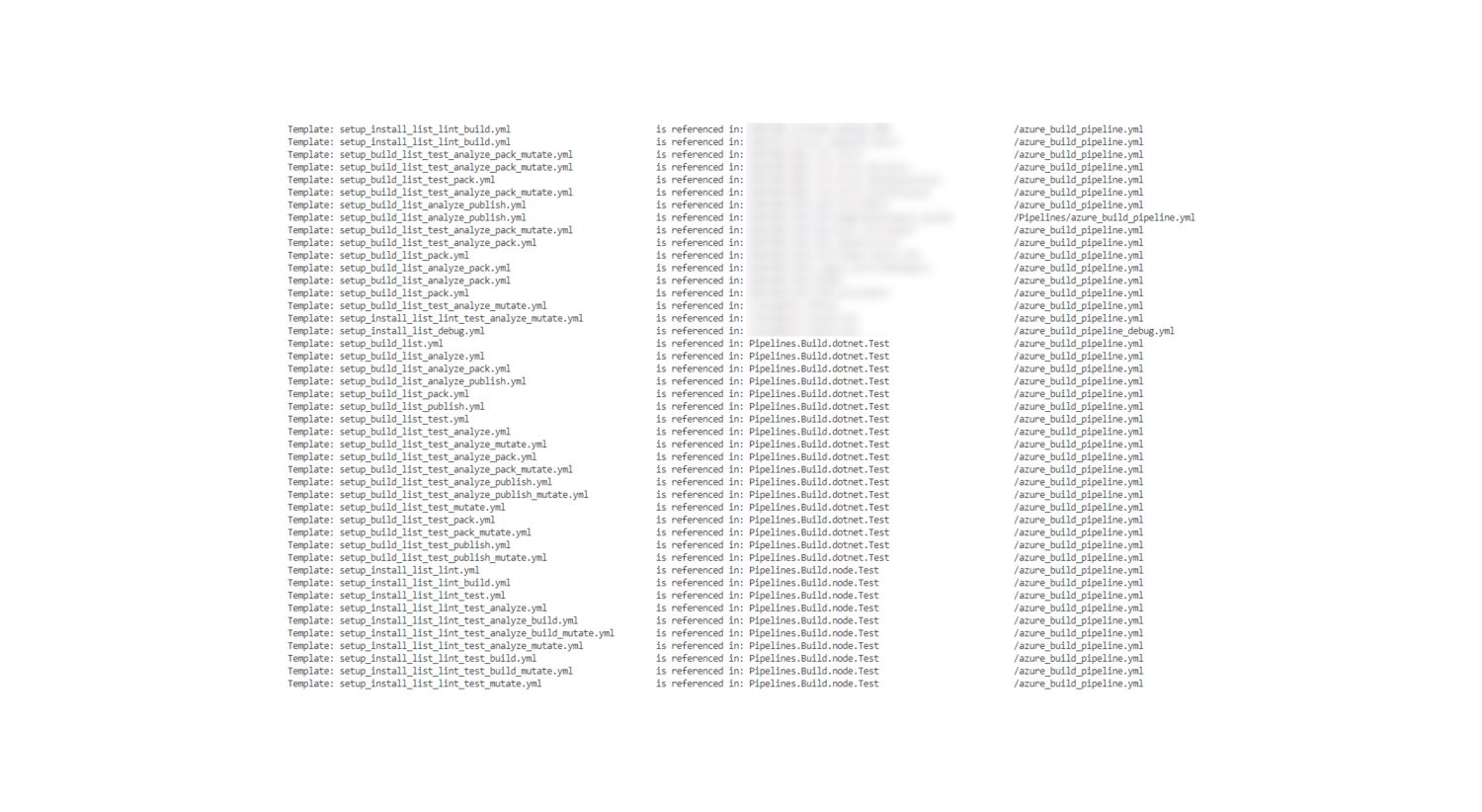

Pipelines - Templates map

To communicate breaking changes, or to fix them myself, I need to know where templates are used. I tried maintaining a static map of all connections between pipelines and templates, and it did not work very well. So I decided to use the Azure DevOps Repositories REST API and have a live map instead.

Creating a .net project just for that would be too much. Instead, a simple PowerShell script fits here perfectly. Now, when I need to communicate changes or make them myself, I know exactly where they must be applied.

A couple of final thoughts

- Can this approach solve any project's needs? No. It's a 'low-hanging fruit' approach that works best for projects with a single deliverable (NuGet package, web/windows app/api/service)

- Is the setup flexible and robust enough? Yes, I think so. With continual refinement and adaptation, it can serve as a foundation for efficient modernization and migration of our projects to the Azure cloud platform, ensuring a robust and streamlined continuous integration process.